ML Algorithm Explained: Support Vector Machines

- By AIPI3 Machine Learning Team

Support Vector Machine (SVM) is a supervised machine learning algorithm, which predicts the class of a given point by creating a hyperplane that separates data into classes. It is used primarily for classification tasks but can be used for regression as well. SVM has many applications such as handwriting recognition, facial analysis, image classification, and much more.

Advantages:

- It performs well when in high-dimensional spaces.

- It is effective for both linear and non-linear problems

- It has good accuracy and requires less processing capability compared to other algorithms.

Disadvantages:

- It is not suitable for large volumes of data and noisy data with overlapping amongst classes.

- It underperforms when the number of features exceeds the number of training data points.

In this blog post, we provide an overview of the SVM algorithm with an example of algorithm implementation with code.

Basic Concepts

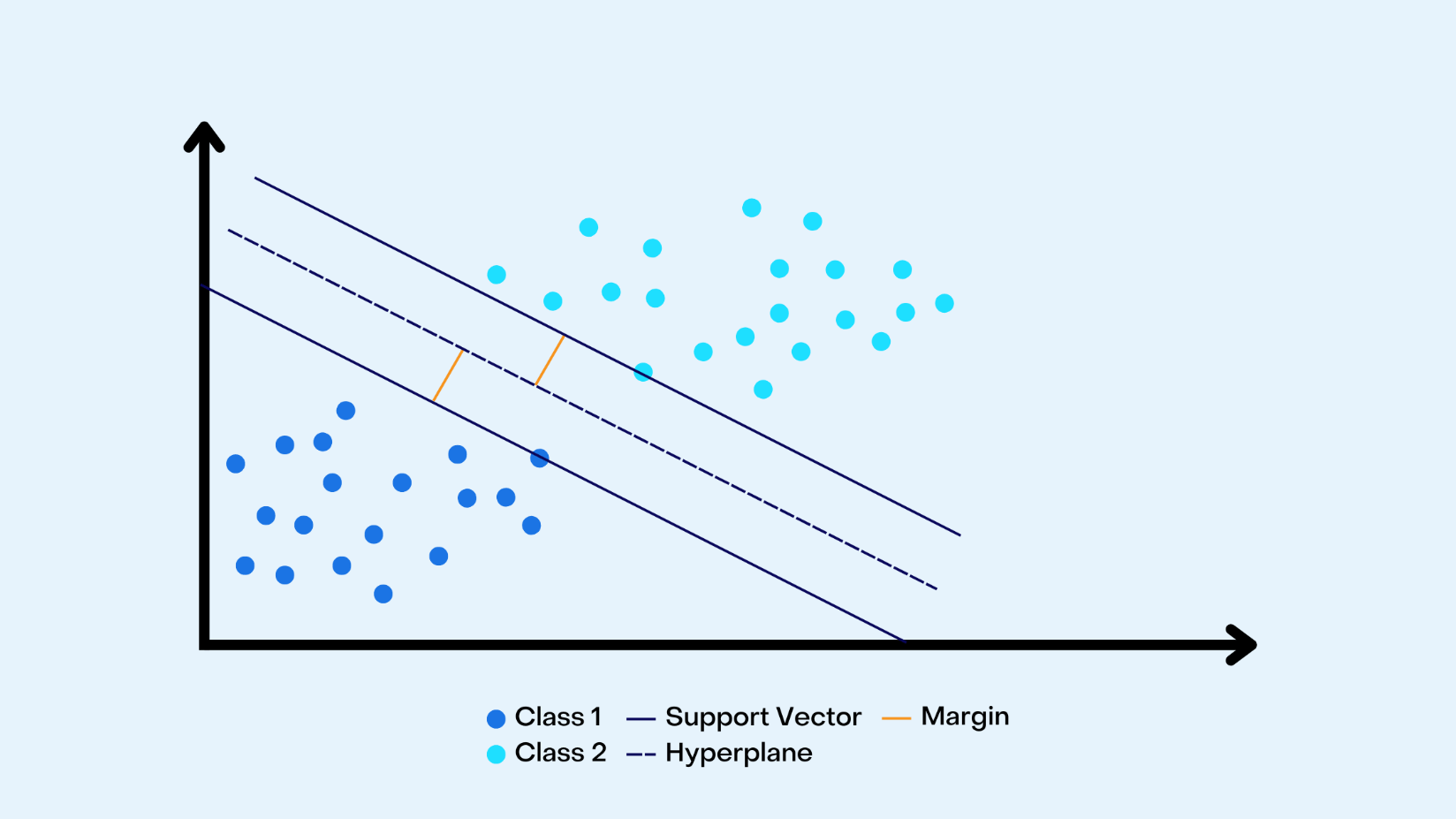

The SVM algorithm separates data into classes using a hyperplane. The hyperplane maximizes the distance between data points, referred to as the margin, of different classes. Support vectors are the data points closest to the hyperplane. If these data points are removed the position of the hyperplane is changed.

Algorithm Implementation with Code

Importing Libraries

For this example, we use scikit-learn, a popular machine learning library in python. Scikit-learn is a useful tool for creating datasets, training and testing algorithms, and much more. You must import all necessary libraries beforehand.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt #plots

%matplotlib inline

from sklearn.datasets import load_iris #dataset

from sklearn.model_selection import train_test_split #Splitting the data into testing and training data sets

from sklearn.preprocessing import StandardScaler #Standardize variables

from sklearn.svm import SVC #SVM Algorithm

from sklearn.metrics import classification_report,confusion_matrix,accuracy_score #Predictions and evaluations

Dataset

To create the dataset, we use load_iris. The iris dataset is a popular dataset used in machine learning. It contains information about 50 observations on four different variables: Petal Length, Petal Width, Sepal Length, and Sepal Width.

# load the iris dataset

iris = load_iris()

# store the feature matrix (X) and response vector (y)

X = iris.data

y = iris.target

Test Train Split

In this section, we split the dataset into training data and testing data. The objective is to train the algorithm to predict a value of y based on its associated X values. This allows us to test the performance of the algorithm against testing data by comparing the predicted values with the actual value.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.3, random_state = 0)

The next step is to create an SVM object, fit it to the training data and generate predictions based on the test data.

svc = SVC() #Support Vector Classifier

svc.fit(X_train,y_train)

pred = svc.predict(X_test)

Evaluation

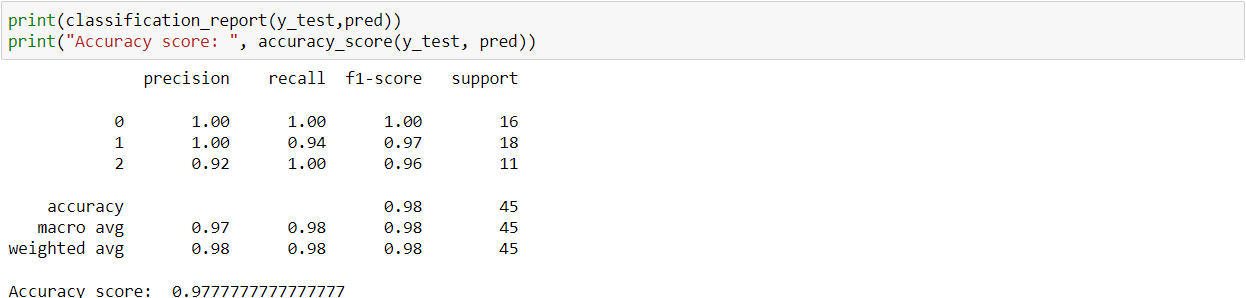

Finally, we evaluate the predictions using a classification_report, which is used to measure the performance of a classification algorithm.

print(classification_report(y_test,pred))

print("Accuracy score: ", accuracy_score(y_test, pred))

The resulting accuracy value is 0.98. In business applications, you would use datasets generated from various business processes. However, the basic process of using the algorithm remains the same.

Tags: Supervised, Linear, Parametric, Non-Probabilistic, Primal, Dual, Kernel Trick, Close-Form

AIPI3’s ML platform uses many innovative machine learning algorithms to create value for businesses. Our platform is driven by artificial intelligence & machine learning experts with extensive experience across a wide range of industries, specializations, and applications.

Get in touch with AIPI3 to discover how we can assist you!